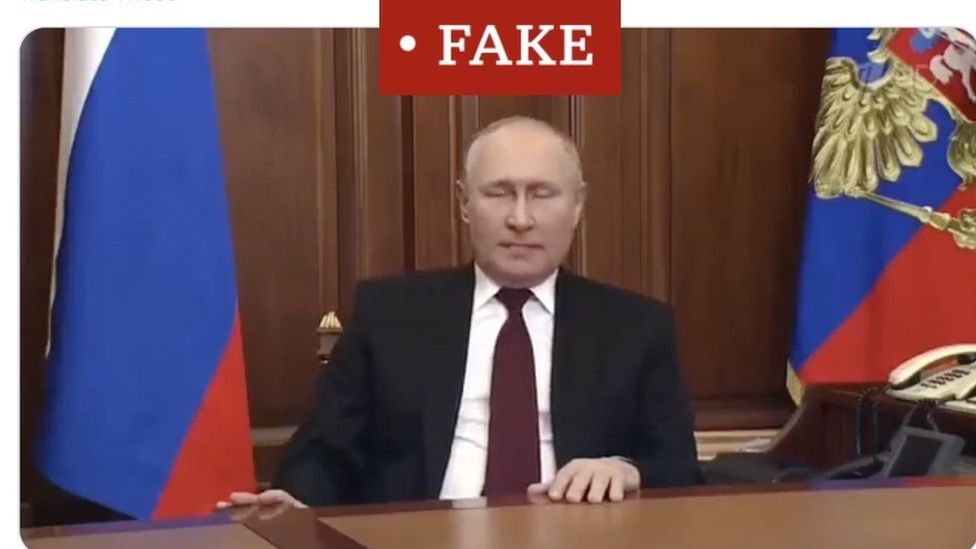

Image caption: The deepfake appeared on the hacked website of Ukrainian TV network Ukrayina 24

By Jane Wakefield

BBC Technology

A deep fake video shared on Twitter, appearing to show Russian President Vladimir Putin declaring peace, has resurfaced.

Meanwhile, this week Meta and YouTube have taken down a deep fake video of Ukraine’s president talking of surrendering to Russia.

As both sides use manipulated media, what do these videos reveal about the state of misinformation in the conflict?

And are people really believing them?

The unconvincing fake of President Zelensky was ridiculed by many Ukrainians.

Volodymyr Zelensky appears behind a podium, telling Ukrainians to put down their weapons. His head appears too large for his body and more pixelated than it, and his voice sounds deeper.

But the Ukrainian Center for Strategic Communications had warned the Russian government may well use deep fakes to convince Ukrainians to surrender.

An ‘easy win’ for social media

In a Twitter thread, Meta security-policy head Nathaniel Gleicher said the company had “quickly reviewed and removed” the deep fake for violating its policy against misleading manipulated media.

YouTube also said it had been removed for violating misinformation policies.

It had been an easy win for the social-media companies, Nina Schick, author of the book Deepfakes, said, because the video was so crude and easily spotted as fake even by “semi-sophisticated viewers”.

“The platforms can make a big hoo-ha about dealing with this,” she said, “when they aren’t doing more on other forms of disinformation.

“Even though this video was really bad and crude, that won’t be the case in the near future.”

And it would still “erode trust in authentic media”.

“People start to believe that everything can be faked,” Ms Schick said.

“It is a new weapon and a potent form of visual disinformation – and anyone can do it.”

The many faces of deep fake technology

IMAGE SOURCE, MY HERITAGE

A deepfake tool letting users animate old photos of relatives has been widely used, and the company behind it, MyHeritage, has now added LiveStory, which allows voices to be added.

Some were impressed by how realistic it was; others were concerned the real Kim Joo-Ha would lose her job.

Deepfake technology is also being used to create pornography, with a proliferation, in recent years, of websites letting users “nudify” pictures.

And it can be used for satirical purposes – last year, Channel 4 created a deep fake Queen to deliver an alternative Christmas message.

The use of deep fakes in politics remains relatively rare.

But a deep fake of former US President Barack Obama has been created, to demonstrate the technology’s power.

When detection goes wrong

“The Zelensky was a best-case deep fake problem,” Witness.org program director Sam Gregory said.

“It was not very good, for a start, so was easily detected.

“And it was debunked by Ukraine and Zelensky had rebutted it on social media, so it was an easy policy takedown for Facebook.”

But in other parts of the world, journalists and human-rights groups feared they had neither the tools to detect nor the ability to debunk deep fakes.

Detection tools analyse the way a person moves, or look for traces of the machine-learning process that created the deep fake.

But last summer, an online detector suggested that a genuine video of a senior politician in Myanmar, apparently confessing to corruption, was a deep fake. Debate remains over whether it was a real statement or a forced confession.

“The lack of 100% proof either way and people’s desire to believe it was a deep fake reflects the challenges of deep fakes in a real-world environment,” said Mr Gregory.

“President Putin was made into a deep fake a few weeks ago and it was widely regarded as satire – but there is a thin line between satire and disinformation.”

Analysis by Shayan Sardarizadeh, BBC Monitoring

The transcript of Mr Zelensky’s deep fake first appeared on the ticker of Ukrainian TV network Ukrayina 24 during a live broadcast on Wednesday.

A screenshot and full transcript later appeared on its website.

Ukrayina 24 confirmed that both its website, inaccessible for most of Wednesday, and its ticker had been hacked.

The video was then widely shared on Russian-language Telegram and on VK, Russia’s equivalent of Facebook.

From there, it made its way to platforms such as Facebook, Instagram, and Twitter.

For some time, there have been warnings that deep fakes could be dangerous, including in a conflict.

But creating a believable deep fake is costly and time-consuming.

And old videos and doctored memes remain the most common and effective misinformation tactics in this war.

Neither well made nor believable, the Zelensky deep fake is among the worst I’ve seen.

But the fact a deep fake has now been made and shared during a war is notable.

The next one may not be as bad.
